In the late 1990s, I attended a meeting with a startup healthcare software company that had built an impressive solution to costly hospital throughput challenges. A key investor was pushing hard for the company to incorporate a “Dot-Com” component into their on-prem solution. His reasoning had nothing to do with any consequential gains in functionality or customer benefits, or even development efficiencies. It was based entirely on his conviction that, in the then-current marketplace, a Dot-Com company was valued considerably higher than a “traditional” software company.

Since then, of course, the software industry can boast countless Dot-Com success stories. But that road is also littered with the carcasses of software companies that jumped into the craze before a clear and meaningful value proposition was truly established. Looking back now, we can see that—for many—the Dot-Com infrastructure, integration, reliability and user acceptance levels were too raw, and the value delivered to customers too low, to gain traction.

In some ways, we’re witnessing a similar phenomenon today with AI. Few are arguing against AI as a game-changing technology. But the AI hype machine, reminiscent of the Dot-Com days, is driving many software companies to rush AI features and functionality into their products. While the use cases are often compelling, for some the rationale seems to be “everyone is talking about it, so we’d better find an application for it.” Meanwhile, across the board, usage data is limited, and questions about the contextual understanding and accuracy of today’s AI platforms remain unanswered.

As was the case years ago, this is precisely the kind of environment in which eager-to-grow software companies can make opportunistic decisions about pricing and value that lead to future problems. Generative AI pricing decisions made in haste can create long-term revenue challenges. I want to help you avoid them.

Critical pricing questions related to GenAI

| Questions | Implications of not clearly answering |
|---|---|
| What are the clear use cases and benefits that including AI will bring to customers? Do they apply across the customer base or only to a subset? | Subpar revenue generation, poor customer account penetration, little to no usage data |
| How will value be determined? How will it evolve over time? Will value be the same across different customer types or use cases? | Lack of account penetration limits insights, initial prices overshoot the market, subpar value capture |
| How should the new features be sold? Should they be incorporated into the existing SKU, form a new add-on SKU, or become an entirely new product? | Confusing packaging, pricebook SKU sprawl, quoting complexity, missed upside opportunity |
| How dependent is the software’s value on the underlying AI platform’s accuracy? How will that value change as the AI platform becomes more reliable? What does that timeline look like? | Poor account adoption, churn, brand damage |
| How will pricing account for these changes and keep pace with improvements in value? | Price-to-value misalignment, missed upside opportunity |
| What strategy is best for monitoring the value of the AI offering: an AI-augmented approach or a fully automated one? | Pricing errors, subpar value capture, loss of analytics needed to drive future pricing improvements, important customer nuances blurred over |

These are complicated questions with serious ramifications, and for companies anxious to capitalize on AI or counterpunch competitors’ AI initiatives, it’s easy to rush through them or bypass them altogether. Working from assumptions or anecdotal information, many default to what they know: often a usage-based pricing model (such as charging per “token”) or an add-on fee for AI features.

At first glance, this approach makes sense. It aligns with familiar practices—for example, email platforms charging per message sent or premium versions of software that unlock additional features. But today, in the infancy of AI, when so much is unknown and evolving rapidly, establishing those positions prematurely—without sufficient supporting data—can ultimately hinder sales or restrict potential revenue.

Let’s play this out. Take a company that develops a valuable AI application to enhance its core software platform. The feature generates positive feedback in trials with existing clients, so the company decides to introduce it as an add-on. It sets the price at 20% of the monthly core software fee and offers current clients a first-year discount to 10%. Because token consumption is hard to predict, it also charges a monthly fee for consumption beyond a limit included in the plan. Finally, it activates its marketing engine to tout the benefits and promote upgrades and new sales.
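To make the scenario’s arithmetic concrete, here is a minimal Python sketch of the add-on’s monthly charge. The 20% list rate and the 10% first-year rate come from the example; the core fee, the included token allowance and the overage price per 1,000 tokens are hypothetical values chosen only for illustration, not figures from any real price list.

```python
from dataclasses import dataclass


@dataclass
class AddOnPlan:
    # Rates taken from the scenario in the text.
    list_rate: float = 0.20        # add-on list price as a share of the core monthly fee
    first_year_rate: float = 0.10  # discounted share offered to current clients in year one
    # Hypothetical values for illustration only (not from the source).
    included_tokens: int = 1_000_000     # tokens included in the monthly plan
    overage_per_1k_tokens: float = 0.02  # charge per 1,000 tokens beyond the allowance


def monthly_addon_charge(plan: AddOnPlan, core_fee: float,
                         tokens_used: int, first_year: bool) -> float:
    """Return the add-on's monthly charge: a share of the core fee plus any token overage."""
    rate = plan.first_year_rate if first_year else plan.list_rate
    base = core_fee * rate
    # Only consumption above the included allowance is billed as overage.
    overage_tokens = max(0, tokens_used - plan.included_tokens)
    overage = overage_tokens / 1000 * plan.overage_per_1k_tokens
    return round(base + overage, 2)


plan = AddOnPlan()
# A hypothetical $5,000/month core customer who uses 1.5M tokens in year one:
print(monthly_addon_charge(plan, core_fee=5000.0, tokens_used=1_500_000, first_year=True))  # 510.0
```

Even in this toy version, the customer’s monthly bill depends on a token count they may not be able to predict, and the add-on’s base price is structurally tied to (and below) the core fee, two of the vulnerabilities discussed next.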

While this approach can be effective, particularly when there is a sufficiently compelling business case for the AI functionality, it has several points of vulnerability that become problematic once it is deployed in the real world:

- By some test measures, LLM accuracy today peaks at around 83%, with some models testing as low as 55%. Although even this level of performance can be highly valuable, some customers may question whether the upgrade is worth the cost. Others may wait on the sidelines until reliability improves. Still others, intrigued by the potential, may investigate competitive options. Each of these responses dampens sales.

- Down the road, as accuracy improves, it may be hard to move customers far from the initial upgrade price, regardless of the feature’s growing benefit, because the value has already been anchored.

- Customers often have little insight into their need for “tokens” or their ability to control their use, and charges based on consumption can introduce complexity and uncertainty, prolonging purchasing decisions. (Important note: Companies themselves can struggle to identify and control the token costs incurred by their app’s algorithms, often concealing a highly variable cost of goods sold.)

- Once a feature is promoted and sold as an add-on, its price can never exceed that of the main product, potentially capping market upside even if the add-on delivers transformational value.

How Real-Time Data is Being Used to Effectively Structure Pricing for AI Features

Alternatively, companies that first emphasize continuous, real-time data gathering and analysis (for buyers, sellers and users) to underpin their go-to-market decisions can identify precisely how users gain value and, as LLM accuracy improves, pivot to pricing models that fully capture that value over the long term. Here’s an example of how that looks:

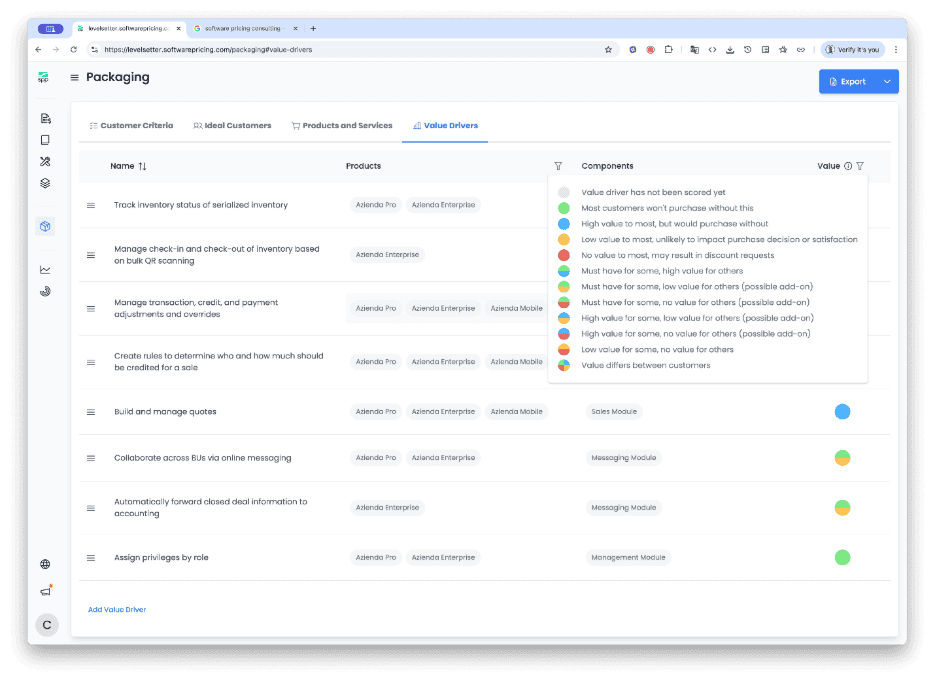

The above illustration shows how users perceive the value of various features that have been scored and validated with customers—i.e., customer “must-haves” vs. “like-to-haves”. Data like this makes it possible to accurately ascertain product value, more effectively attract ideal customers and better match offering packages to true customer perceptions.

Such insight is critical when setting pricing strategy, but those serious about optimizing revenue in an environment moving as fast as AI must pay similar attention to data throughout the selling and buying process. That data can answer questions like these:

- How are the new AI SKUs being presented to and received by customers?

- What journey did the buyer take to review this SKU? How did that impact their review of other company products?

- What pricing and packages were offered to the customer? Were discounts offered? What were the triggers for the discounts?

- How does the data change for different types of customers or use cases?

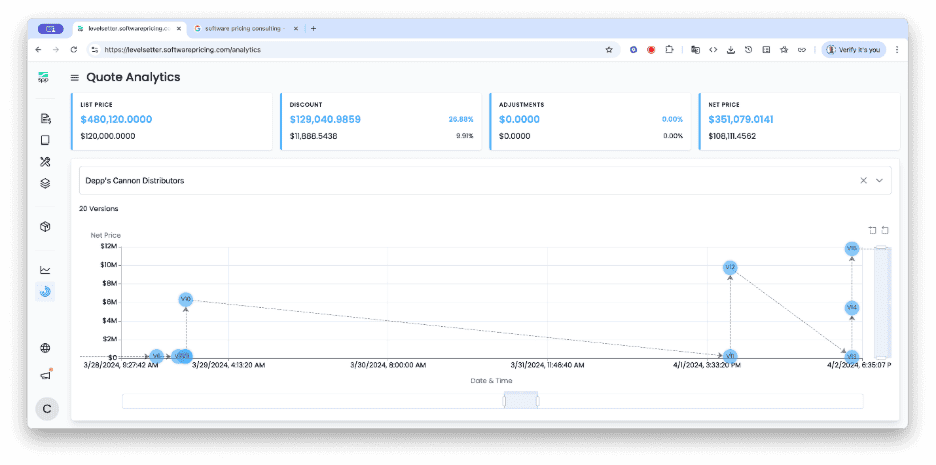

In the illustration above, the data shows the progression of a single customer through the buying process, prior to a win or loss.

Data like this allows management to understand how buyers explore the company’s offering alternatives, revealing which offers the customer considers and the actual journey they take to configure their purchase. This enables factual, not assumptive, analysis of the company’s packaging and can uncover patterns that dramatically affect performance, like when pricing is a barrier or an accelerator to share of customer, retention and overall revenue. For example, the data may indicate that showing a higher tier edition, even though the prospect is not initially interested in it, can build higher rates of attachment to add-ons and services.
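As a rough illustration of how such a pattern might be checked, the Python sketch below compares add-on attachment rates for deals where a higher-tier edition was shown during the buying journey against deals where it was not. The `QuoteJourney` record shape and the sample deals are hypothetical assumptions made for this example; real journey data would come from the company’s own quoting and CRM systems.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class QuoteJourney(NamedTuple):
    """One buyer's quoting journey (hypothetical record shape)."""
    deal_id: str
    shown_higher_tier: bool  # was a higher-tier edition presented during the journey?
    attached_addon: bool     # did the final deal include an add-on or service?


def attach_rates(journeys: Iterable[QuoteJourney]) -> Dict[bool, float]:
    """Share of deals with an add-on attached, split by whether a higher tier was shown."""
    totals: Dict[bool, int] = defaultdict(int)
    attached: Dict[bool, int] = defaultdict(int)
    for j in journeys:
        totals[j.shown_higher_tier] += 1
        if j.attached_addon:
            attached[j.shown_higher_tier] += 1
    return {group: attached[group] / totals[group] for group in totals}


# Hypothetical sample deals, for illustration only.
sample = [
    QuoteJourney("d1", True, True),
    QuoteJourney("d2", True, True),
    QuoteJourney("d3", True, True),
    QuoteJourney("d4", True, False),
    QuoteJourney("d5", False, True),
    QuoteJourney("d6", False, False),
    QuoteJourney("d7", False, False),
    QuoteJourney("d8", False, False),
]
print(attach_rates(sample))  # {True: 0.75, False: 0.25}
```

A raw comparison like this only surfaces a pattern worth testing; on its own, a gap between the two groups does not show that presenting the higher tier caused the extra attachment.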

By accessing such real-time data, the company can validate or disprove assumptions, quickly iterate and test changes, simulate the impact of adjustments to licensing metrics or packaged offers, and operationalize at scale. All of this reduces rollout risk and accelerates the time to fully capture upside.

A Better Path to Optimizing the Financial Performance of AI-Driven Features

It’s not hyperbole to say AI will substantively impact every software company, just as it wasn’t hyperbole to say the same of the internet twenty-five years ago. But as that experience taught us, rushing into an enormously dynamic and unsettled marketplace with pricing models based on assumptions and limited data can lead to costly mistakes. The availability of real-time data to guide critical pricing model decisions makes it much easier to choose and sustain the most profitable course.